Apple recently announced that it will introduce a new photo scanning feature for the iPhone. The feature will scan photos stored on the iPhone to detect child abuse material.

The new feature does appear to serve a good purpose. Apple says the step is intended to limit the spread of child sexual abuse material (CSAM).

However, the photo scanning system can scan all private photos on a user's phone, which means it could be misused by governments and bad actors.

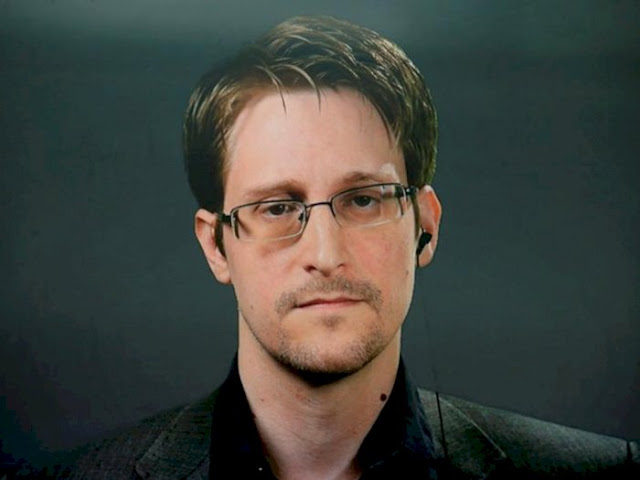

Many experts consider this to be a major problem. Former NSA contractor Edward Snowden was among those who denounced the new feature, arguing that, however well-intentioned, Apple is essentially rolling out mass surveillance around the world.

"No matter how well-intentioned, Apple is rolling out mass surveillance worldwide through this feature. Make no mistake: if they can scan for child porn today, they can scan for anything tomorrow," Snowden tweeted, as reported by Ubergizmo on Sunday (8/8/2021).

Meanwhile, actor and activist Daniel Bostic asked who could guarantee that governments will not exploit the feature if it can be used to identify child abuse photos.

"Apple's photo scanner system will likely be used to identify anti-government photos," he said.

Criticism from the Head of WhatsApp

Earlier, the Head of WhatsApp, Will Cathcart, said Apple had taken the wrong approach with its latest 'Child Safety' feature, which aims to protect children from sexual abuse.

Apple says the new feature, announced on Thursday (5/8/2021), is intended to limit the spread of child sexual abuse material (CSAM).

However, Cathcart called Apple's approach 'deeply concerning'. The approach involves taking hashes of images uploaded to iCloud and comparing them against a database of hashes of known CSAM images, as reported by The Verge on Sunday (8/8/2021).

This hashing system lets Apple keep user data encrypted and run the analysis on the device, while still allowing the company to report users to authorities if they are found to be sharing child abuse images.
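The matching step described above can be illustrated with a minimal sketch. It is not Apple's implementation: SHA-256 is swapped in as a stand-in hash, which only matches byte-identical files, and the names `image_hash`, `find_matches`, `known_hashes` and `uploads` are hypothetical and exist only for illustration.

```python
import hashlib


def image_hash(image_bytes: bytes) -> str:
    """Return a hex digest of the image bytes.

    Assumption: SHA-256 stands in for the image hash here, so only
    byte-identical files produce the same value.
    """
    return hashlib.sha256(image_bytes).hexdigest()


def find_matches(uploads: dict[str, bytes], known_hashes: set[str]) -> list[str]:
    """Return the names of uploads whose hash appears in known_hashes."""
    return [
        name
        for name, data in uploads.items()
        if image_hash(data) in known_hashes
    ]


# Hypothetical database: it holds hashes only, never the images themselves.
known_hashes = {image_hash(b"flagged-image-bytes")}

# Hypothetical uploads, using placeholder bytes instead of real image files.
uploads = {
    "holiday.jpg": b"ordinary-photo-bytes",
    "copy.jpg": b"flagged-image-bytes",
}

print(find_matches(uploads, known_hashes))  # ['copy.jpg']
```

In this sketch the matching code does not know what the hashes represent. Whatever is placed in the database is what gets flagged, which is the property critics of the feature point to.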

In a tweet, Cathcart said Apple "has built software that can scan all private photos on a user's phone".

"I read the information Apple released yesterday and I am concerned. I think this is the wrong approach and a setback for the privacy of people around the world," he tweeted.

"People are asking if we will adopt this system for WhatsApp. The answer is no," Cathcart said.